Models are still expensive to run, hard to use, and frequently wrong

“Should we automate away all the jobs, including the fulfilling ones?”

This is one of several questions posed by the Future of Life Institute’s recent call for a pause on “giant AI experiments,” which now has over 10,000 signatories including Elon Musk, Steve Wozniak, and Andrew Yang. It sounds dire—although maybe laced through with a little bit of hype—and yet how, exactly, would AI be used to automate all jobs? Setting aside whether that’s even desirable—is it even possible?

“I think the real barrier is that the emergence of generalized AI capabilities as we’ve seen from OpenAI and Google Bard is that similar to the early days when the Internet became generally available, or cloud infrastructure as a service became available,” says Douglas Kim, a fellow at the MIT Connection Science Institute. “It is not yet ready for general use by hundreds of millions of workers as being suggested.”

Even researchers can’t keep up with AI innovation

Kim points out that while revolutionary technologies can spread quickly, they typically fail to reach widespread adoption until they prove themselves through useful, easily accessible applications. He notes that generative AI will need “specific business applications” to move beyond a core audience of early adopters.

Matthew Kirk, the head of AI at Augment.co, has a similar view. “What I think is happening is similar to what happened in the early days of the Internet. It was an absolute mess of ideas, and no standards. It takes time and cooperation for human beings to settle on standards that people follow. Even something as mundane as measuring time is incredibly complicated.”

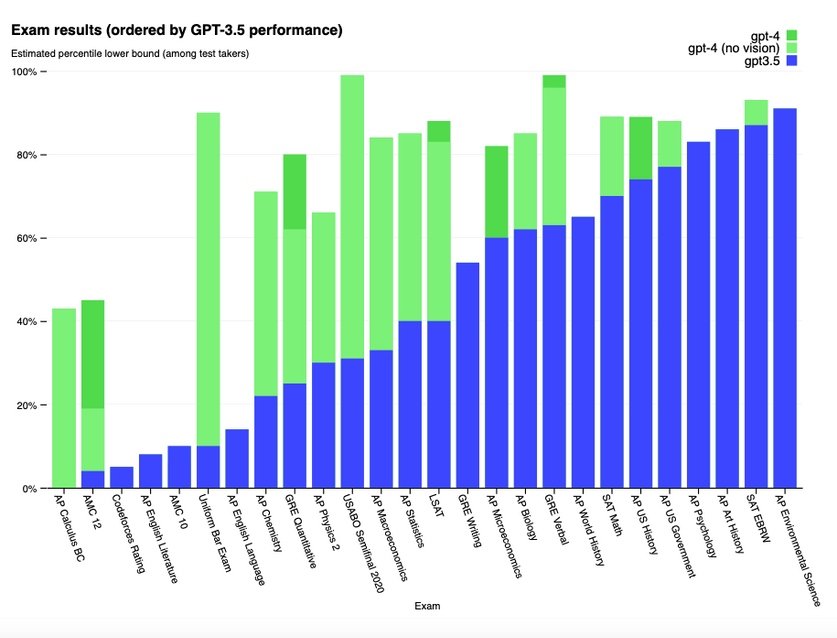

Standardization is a sore spot for AI development. The methods used to train the models and fine-tune the results are kept secret, making basic questions about how they function hard to answer. OpenAI has touted GPT-4’s ability to pass numerous standardized tests—but did the model genuinely understand the tests, or simply train to reproduce the correct answers? And what does that mean for its ability to tackle novel tasks? Researchers can’t seem to agree on the answer, or on the methods that might be used to reach a conclusion.

OpenAI’s GPT-4 can ace many standardized tests. Does it truly understand them, or was it trained on the correct answers? OPENAI

Even if standards can be agreed on, designing and producing the physical hardware required for the widespread use of AI-powered tools based on large language models (LLMs) like GPT-4, or on other generative AI systems, could prove a challenge. Lucas A. Wilson, the head of global research infrastructure at Optiver, believes the AI industry is in an “arms race” to produce the most complicated LLM possible. This, in turn, has quickly increased the compute resources required to train a model.

“The pace of innovation in the AI space means that immediately applicable computational research is now in advance of the tech industry’s ability to develop new and novel hardware capabilities, and so the hardware vendors must play catch-up to the needs of AI developers,” says Wilson. “I think vendors will have a hard time keeping up for the foreseeable future.”

Like you, AI won’t work for free

In the meantime, developers must find ways to deal with limitations. Training a powerful LLM from scratch can present unique opportunities, but it’s only viable for large, well-funded organizations. Implementing a service that taps into an existing model is much more affordable (OpenAI’s GPT-3.5 Turbo, for example, prices API access at roughly US $0.002 per 750 English words). But costs still add up when an AI-powered service becomes popular. In either case, rolling out AI for unlimited use isn’t practical, forcing developers to make tough choices.
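To see how a small per-request price adds up, here is a rough back-of-the-envelope sketch in Python. It uses only the price quoted above (about US $0.002 per 750 English words). The function name, the traffic figures, and the 30-day month are illustrative assumptions, not numbers from any vendor or from the people quoted here.

```python
# Price quoted in the article: roughly US $0.002 per 750 English words.
PRICE_PER_750_WORDS_USD = 0.002


def estimate_monthly_cost(words_per_request: int,
                          requests_per_day: int,
                          days: int = 30) -> float:
    """Estimate one month of API spend in US dollars.

    All inputs are hypothetical traffic figures chosen by the caller.
    """
    total_words = words_per_request * requests_per_day * days
    return total_words / 750 * PRICE_PER_750_WORDS_USD


if __name__ == "__main__":
    # Hypothetical service: 1,500 words per request (prompt plus reply).
    for daily_requests in (1_000, 100_000, 1_000_000):
        cost = estimate_monthly_cost(1_500, daily_requests)
        print(f"{daily_requests:>9,} requests/day -> ${cost:,.2f} per month")
```

Under these assumptions the cost grows linearly with traffic, so a price that looks negligible for a prototype can become a real line item once a service is popular.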

“Generally, startups building with AI should be very careful with dependencies on any specific vendor APIs. It’s also possible to build architectures such that you don’t light GPUs on fire, but that takes a fair bit of experience,” says Hilary Mason, the CEO and cofounder of Hidden Door, a startup building an AI platform for storytelling and narrative games.

This is a screen capture of an AI-powered tool used to generate narrative games. It includes multiple characters and prompts that a user can select. HIDDEN DOOR

Most services built on generative AI include a firm cap on the volume of content they’ll generate per month. These fees can add up for businesses and slow down people looking to automate tasks. Even OpenAI, despite its resources, caps paying users of ChatGPT, depending on the current load: As of this writing, the cap is 25 GPT-4 queries every three hours. That’s a big problem for anyone looking to rely on ChatGPT for work.
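A cap like "25 queries every three hours" can be modeled as a sliding-window limit. The Python sketch below is only an illustration of that idea. How OpenAI actually enforces its cap is not described here, and the class name and its parameters are hypothetical.

```python
from collections import deque


class SlidingWindowCap:
    """Allow at most `max_requests` in any rolling `window_seconds` period.

    Hypothetical helper for illustration; not any vendor's real mechanism.
    """

    def __init__(self, max_requests: int = 25,
                 window_seconds: float = 3 * 60 * 60) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._sent = deque()  # timestamps (in seconds) of accepted requests

    def allow(self, now: float) -> bool:
        """Return True and record the request if it fits under the cap."""
        # Forget requests that have aged out of the rolling window.
        while self._sent and now - self._sent[0] >= self.window_seconds:
            self._sent.popleft()
        if len(self._sent) < self.max_requests:
            self._sent.append(now)
            return True
        return False


if __name__ == "__main__":
    cap = SlidingWindowCap()
    # Simulate one query per minute for the first hour.
    accepted = sum(cap.allow(minute * 60.0) for minute in range(60))
    print(f"Accepted {accepted} of 60 queries in the first hour")
```

In this model, someone who sends a query every minute exhausts the allowance within the first half hour and then waits until the earliest queries age out of the window, which is why such a cap is hard to build a workday around.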